Jan 6, 2026

Enterprise AI hits a new ceiling: scaling agents now depends on trust, traceability, and governance, reshaping infrastructure, APIs, roles, and operating models beyond raw intelligence in 2026.


I’ve seen how AI isn’t just a technology issue; it’s a culture issue. At Namasys Analytics, our most profound breakthroughs didn’t come when the code worked flawlessly. They came when the Executive Decision-Makers, leadership, and teams committed to intelligent experimentation, accountability, and learning.

In 2025, the organizations that get this right are outperforming their peers, and the differentiator is often how their Executive Decision-Makers oversee AI adoption.

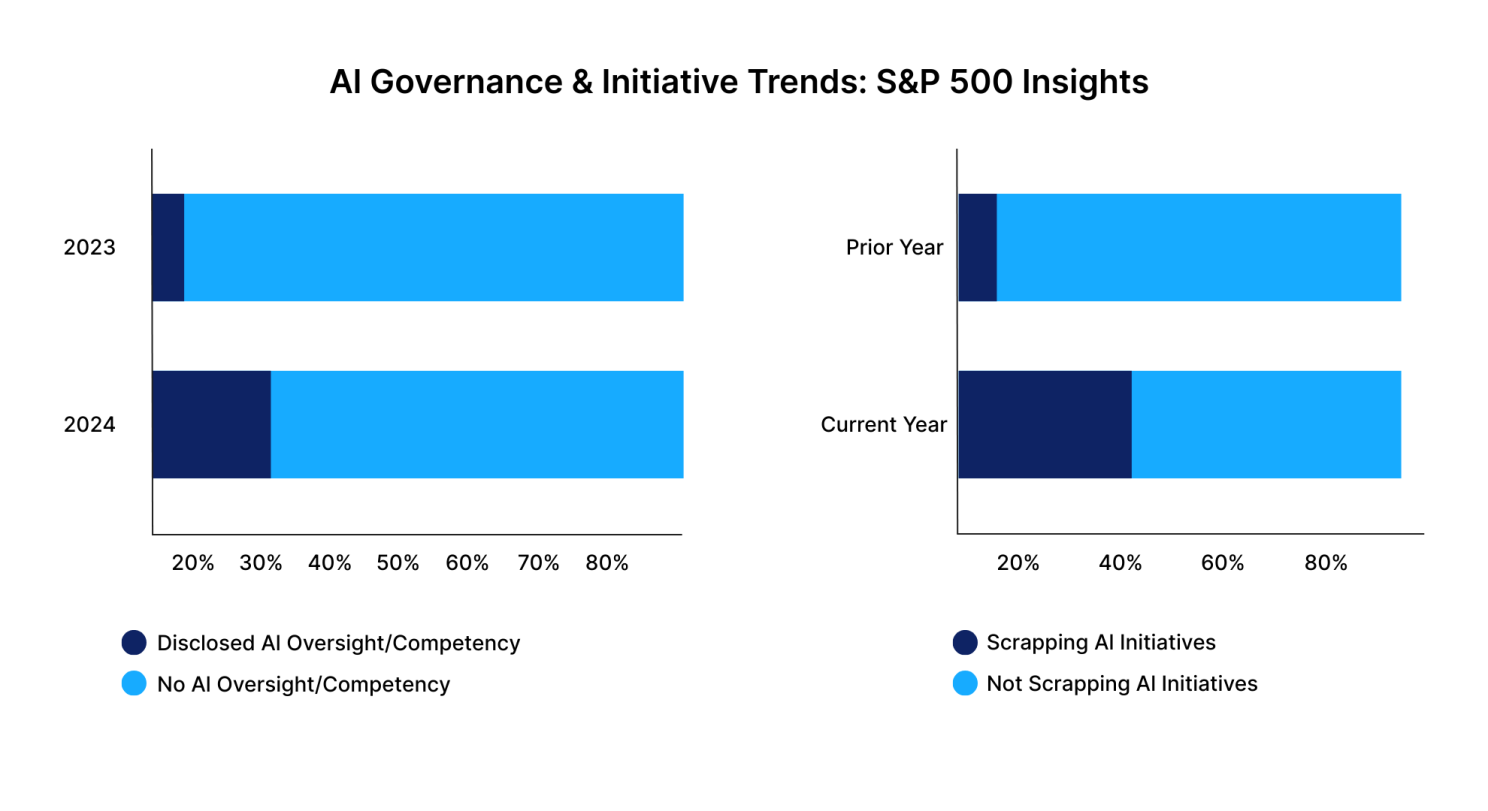

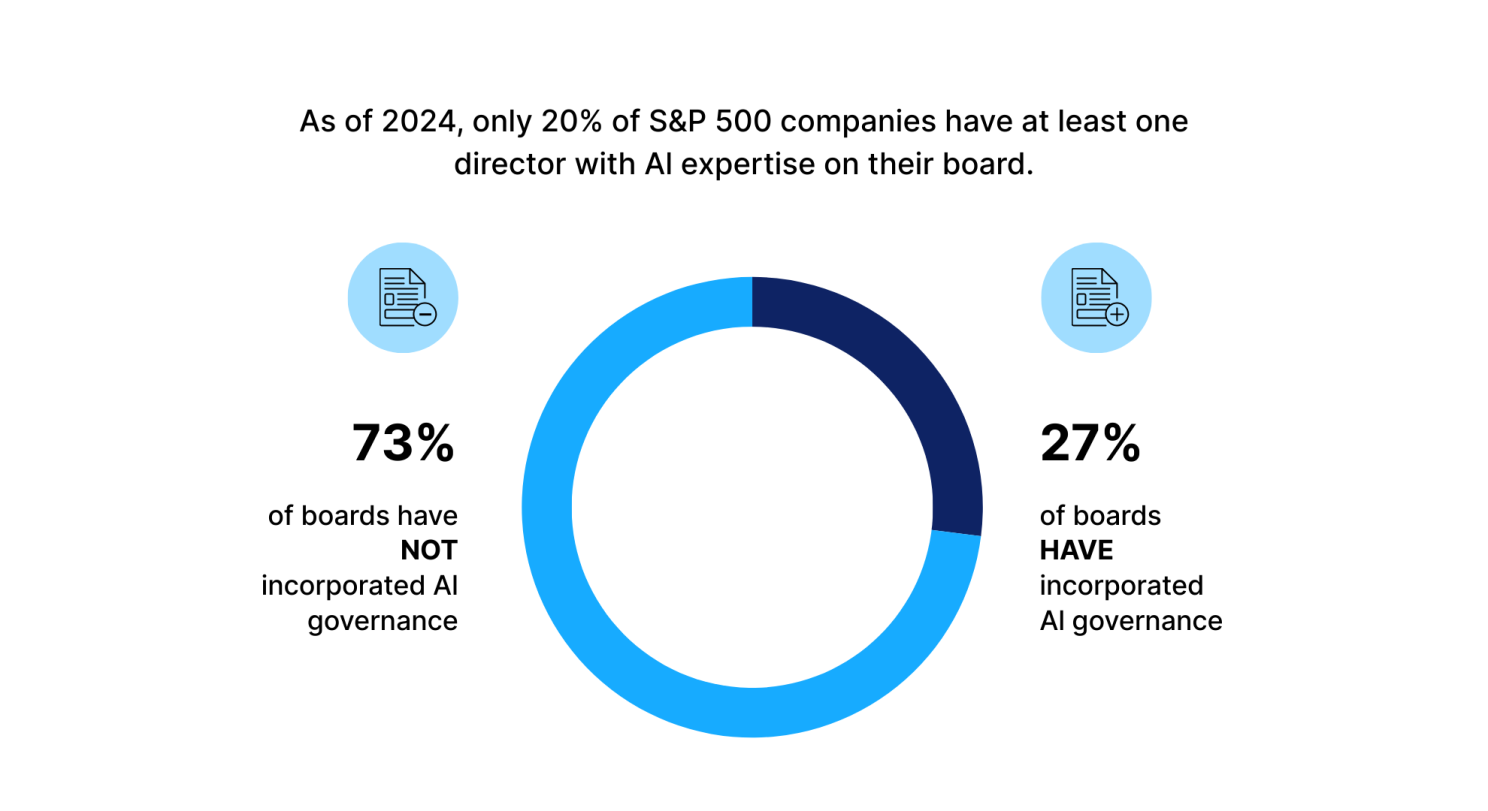

These numbers tell a clear story: adoption is rising, but culture, oversight, risk management, and scaling remain stumbling blocks.

To bridge that gap, I believe a successful AI-driven culture depends on several pillars.

Educating and involving Executive Decision-Makers is critical to scaling AI adoption.

To address this:

Culture may seem intangible, but these metrics provide clarity and discipline.

Some organizations with mature AI transformations report revenue or profit increases of 30-50% from their AI investments when culture, oversight, and strategy are well aligned. (Specific figures vary across studies, but the trend is consistent among the highest performers.)

Here are the institutional levers that work:

Q1. Can small/medium organizations implement this with limited resources?

Yes. Executive oversight can be scaled down: for example, one director with an interest in AI, a part-time committee, and external advisors. The key is commitment and consistency.

Q2. How long before cultural change shows in KPIs?

Typically, 12-18 months. Early signs: increased experimentation, fewer failed pilots, rising adoption across more departments.

Q3. What is a reliable sign that Executive oversight is working?

When disclosures increase (for example, AI oversight written into charters, or board members with AI expertise) and when project failures lead to documented learning rather than blame.

Q4. How do you balance risk tolerance with regulation / ethics?

By establishing clear risk frameworks, running internal and external audits, setting up ethics boards, and building risk management into AI governance from day one.

Q5. What data should Executive Decision-Makers demand from management?

Metrics: pilot-to-production ratio; ROI per AI initiative; risk incidents; bias and fairness audits; employee sentiment regarding AI adoption.
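To make these metrics concrete, here is a minimal sketch of how management might roll up an AI portfolio into a board-level summary. It assumes a hypothetical `AIInitiative` record with illustrative fields. It also assumes "pilot-to-production ratio" means the share of pilots that reached production, and ROI means (benefit − cost) / cost. Employee sentiment is left out because it usually comes from surveys rather than project records. The portfolio numbers are invented for illustration only.

```python
from dataclasses import dataclass


@dataclass
class AIInitiative:
    # Hypothetical record; field names are illustrative, not a standard schema.
    name: str
    reached_production: bool
    cost: float            # total spend on the initiative
    benefit: float         # measured financial benefit attributed to it
    risk_incidents: int    # logged incidents (errors, compliance issues, etc.)
    fairness_audited: bool # whether a bias/fairness audit was completed


def roi(initiative: AIInitiative) -> float:
    """Simple ROI, assumed here as (benefit - cost) / cost."""
    if initiative.cost <= 0:
        raise ValueError(f"{initiative.name}: cost must be positive to compute ROI")
    return (initiative.benefit - initiative.cost) / initiative.cost


def board_summary(initiatives: list[AIInitiative]) -> dict:
    """Aggregate the oversight metrics from Q5 into a single report."""
    if not initiatives:
        raise ValueError("no initiatives to summarise")
    total = len(initiatives)
    in_production = sum(1 for i in initiatives if i.reached_production)
    return {
        # Assumed definition: share of all pilots that reached production.
        "pilot_to_production_rate": in_production / total,
        "roi_per_initiative": {i.name: round(roi(i), 2) for i in initiatives},
        "total_risk_incidents": sum(i.risk_incidents for i in initiatives),
        "share_fairness_audited": sum(i.fairness_audited for i in initiatives) / total,
    }


if __name__ == "__main__":
    # Invented example portfolio, for illustration only.
    portfolio = [
        AIInitiative("invoice-triage", True, 100_000, 160_000, 1, True),
        AIInitiative("chat-assistant", True, 200_000, 180_000, 3, True),
        AIInitiative("demand-forecast", False, 50_000, 0, 0, False),
        AIInitiative("contract-review", False, 80_000, 0, 0, True),
    ]
    print(board_summary(portfolio))
```

Keeping the summary as a plain dictionary makes it easy to track quarter over quarter. A falling pilot-to-production rate or a rising incident count is the kind of signal the board should ask management to explain.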

Q6. How do we avoid hype and wastage?

By being very clear on strategy: selecting use cases with measurable benefit, ensuring data readiness, and avoiding overinvestment in “flashy” projects that don’t align with business value.

In my years leading AI innovation, I’ve observed that technology wins can be fleeting, but cultural wins endure. Organizations that thrive don’t just build models; they build belief. They commit to open experimentation, they allow failure to teach, and they anchor governance in transparency and accountability.

With robust Executive oversight, you change everything: risk becomes managed risk, failures become lessons, and AI becomes a sustainable advantage. For CEOs and Executive Decision-Makers alike, the question isn’t whether to build an AI-driven culture but when. The sooner you do, the greater your lead in value creation.


