Jan 6, 2026

Enterprise AI hits a new ceiling: scaling agents now depends on trust, traceability, and governance, reshaping infrastructure, APIs, roles, and operating models beyond raw intelligence in 2026.

Most data platform failures in 2026 are not technology failures. They are operating model failures, where architecture, governance, and decision ownership are misaligned.

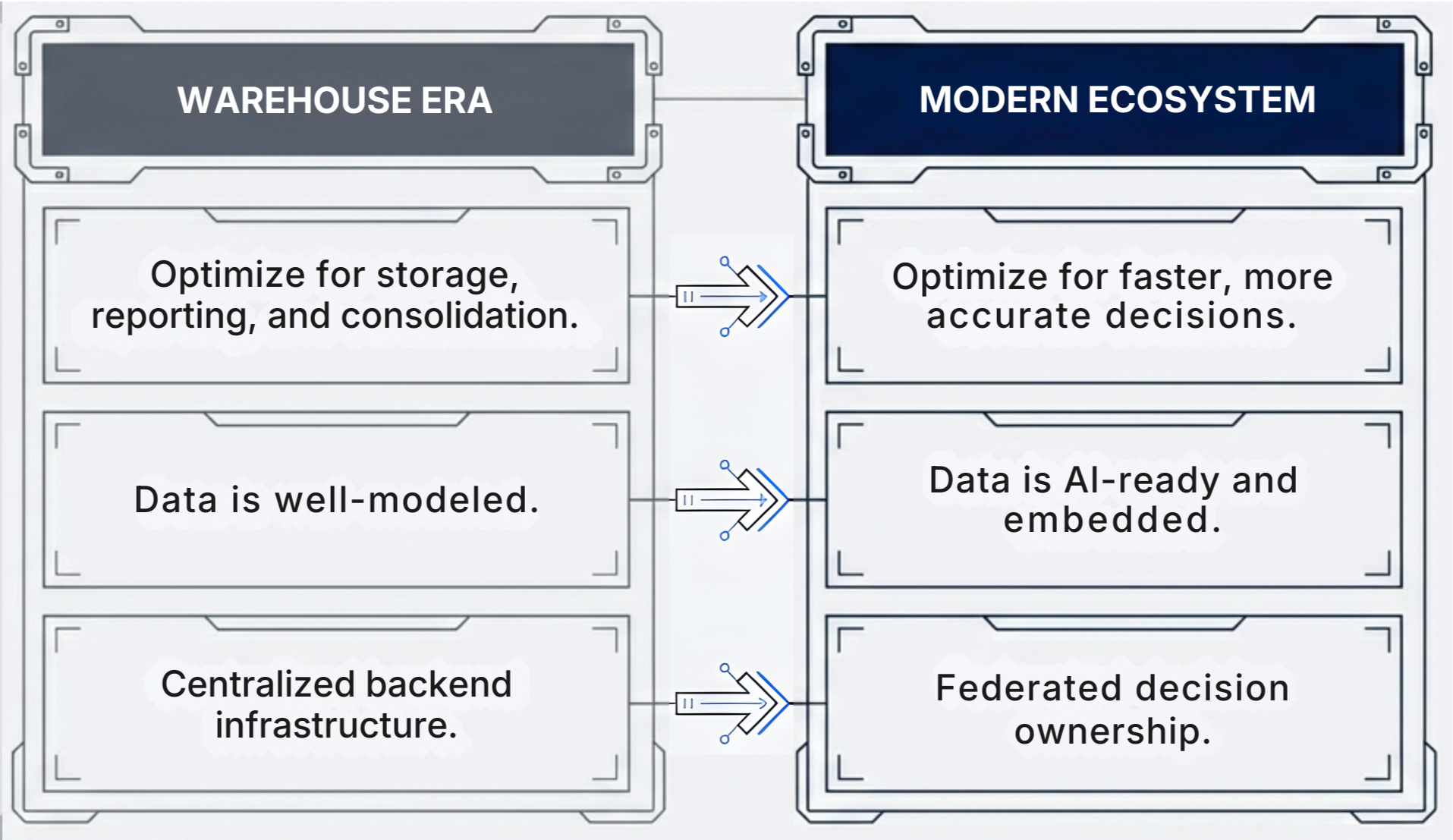

The next generation of data platforms is about how data is owned, governed, and activated, not just where it is stored.

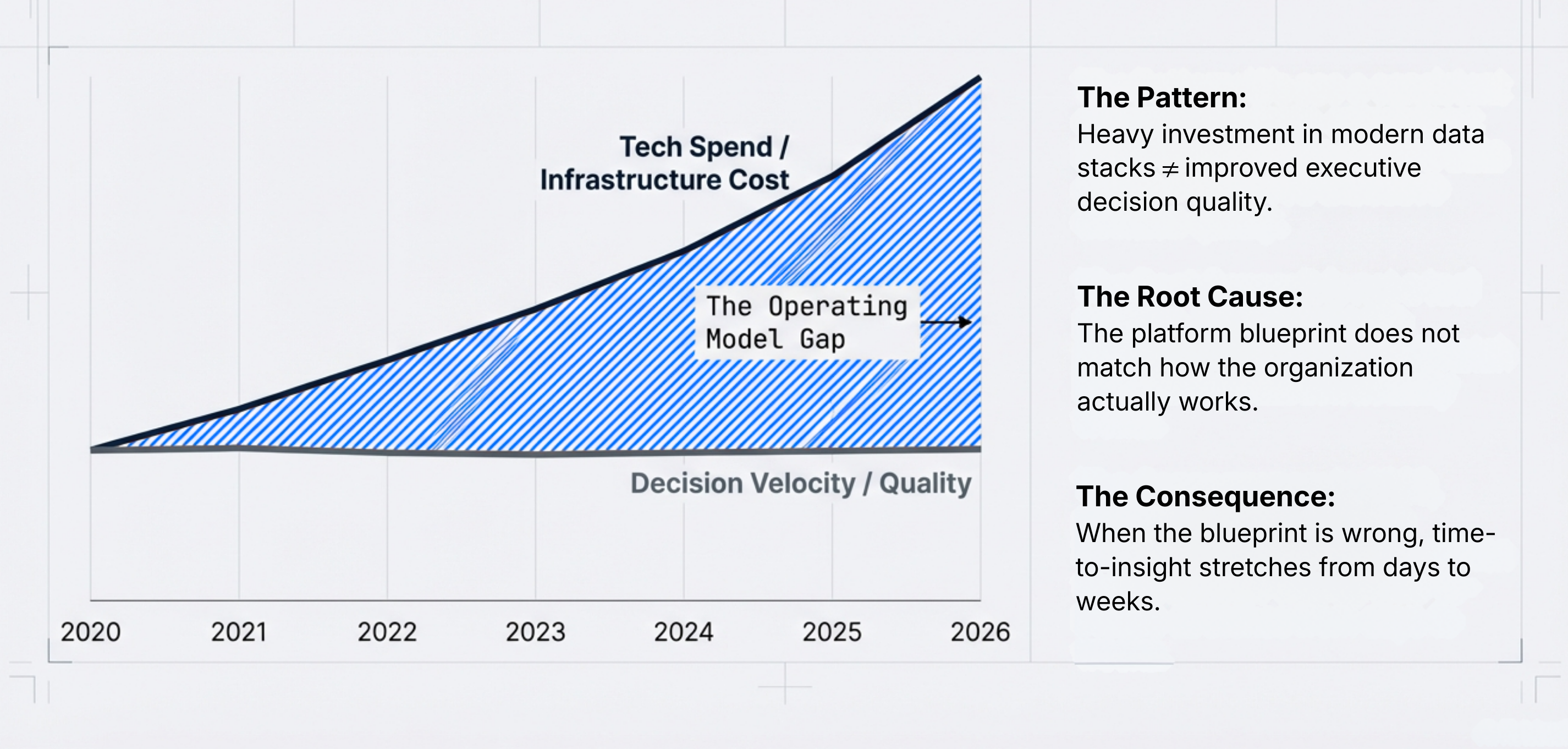

One of the most common patterns we see in large enterprises: they invest heavily in modern data stacks, yet executive decision quality barely improves.

This is not because the tools are wrong, but because the platform blueprint does not match how the organization actually works.

In 2026, data platforms are no longer backend infrastructure. They are the decision backbone of the enterprise. This is why data platforms are evolving structurally.

For years, enterprises optimized data platforms for storage, reporting, and consolidation.

The real constraint is no longer data availability; it is the organization’s ability to convert data into timely, accountable decisions.

This pressure has driven the emergence of new architectural blueprints.

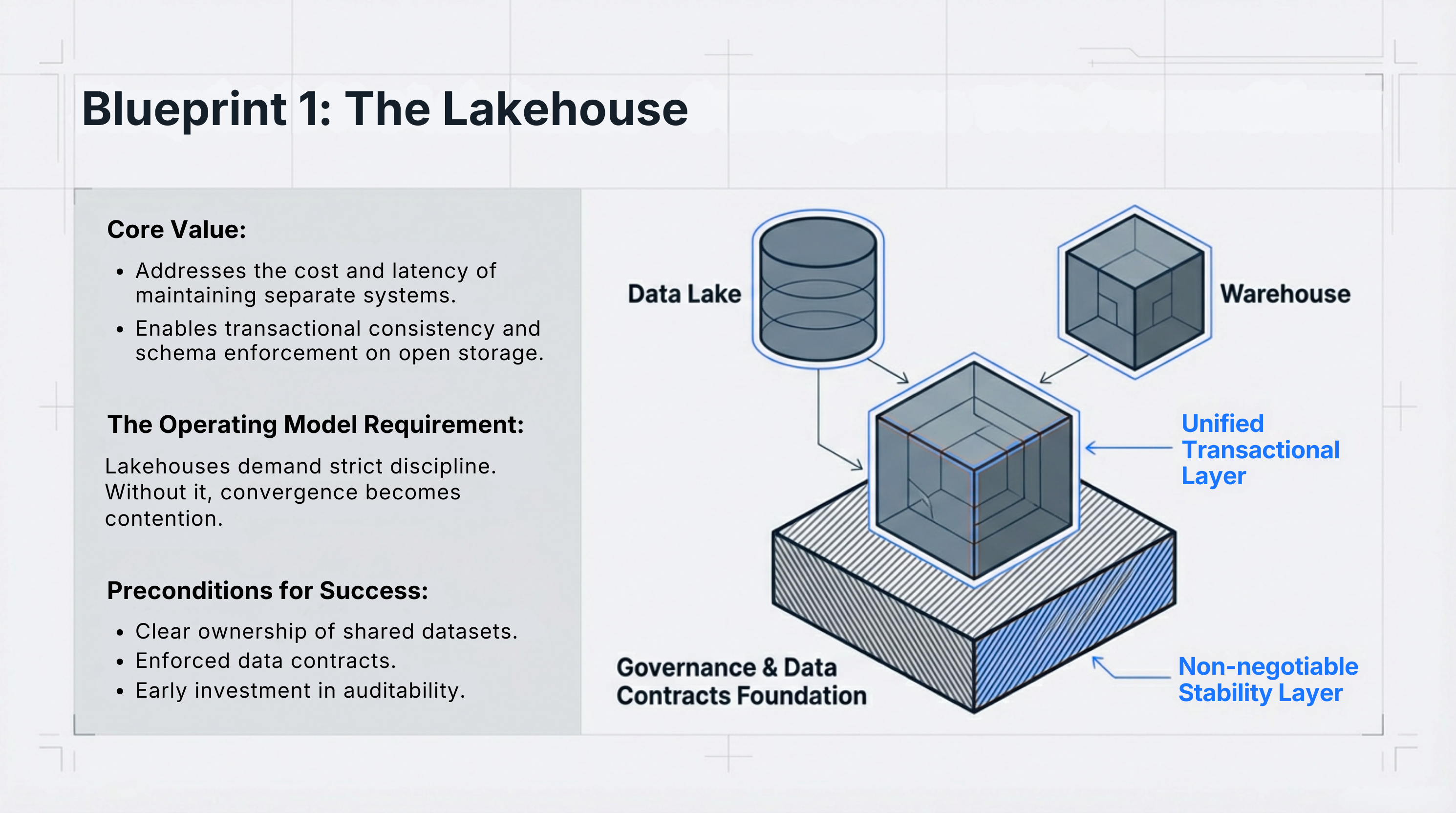

Lakehouse architectures emerged to address a real enterprise problem: the cost, latency, and complexity of maintaining separate data lakes and data warehouses.

By enabling transactional consistency, schema enforcement, and analytics on open storage, lakehouses reduce duplication and simplify analytics pipelines.

However, this convergence comes with non-negotiable preconditions.

Lakehouses are a poor fit where:

Lakehouses are powerful, but only for organizations mature enough to operate a shared data foundation.
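To make two of the lakehouse guarantees above concrete, here is a minimal Python sketch of schema enforcement and all-or-nothing commits over versioned snapshots. `ToyLakehouseTable` is a hypothetical, in-memory illustration; it is not how Delta Lake, Apache Iceberg, or Apache Hudi are implemented or called, and real table formats persist commit metadata alongside data files on open storage rather than keeping snapshots in memory.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional

Row = Dict[str, Any]


@dataclass
class ToyLakehouseTable:
    """Hypothetical in-memory table; not the API of any real table format."""

    schema: Dict[str, type]  # column name -> expected Python type
    # One snapshot per committed version; version 0 is the empty table.
    versions: List[List[Row]] = field(default_factory=lambda: [[]])

    def _validate(self, row: Row) -> None:
        # Schema enforcement: reject missing, extra, or mistyped columns.
        if set(row) != set(self.schema):
            raise ValueError(f"columns {sorted(row)} do not match schema {sorted(self.schema)}")
        for col, expected in self.schema.items():
            if not isinstance(row[col], expected):
                raise TypeError(f"column {col!r} expects {expected.__name__}")

    def append(self, rows: List[Row]) -> int:
        # Validate the whole batch first, so one bad row leaves the table
        # unchanged (all-or-nothing commit).
        for row in rows:
            self._validate(row)
        self.versions.append(self.versions[-1] + [dict(r) for r in rows])
        return len(self.versions) - 1  # number of the newly committed version

    def read(self, version: Optional[int] = None) -> List[Row]:
        # Readers get a consistent snapshot of one committed version.
        snapshot = self.versions[-1] if version is None else self.versions[version]
        return list(snapshot)


table = ToyLakehouseTable(schema={"order_id": int, "amount": float})
first = table.append([{"order_id": 1, "amount": 120.0}])
try:
    # The second row has a mistyped order_id, so the whole batch is rejected.
    table.append([{"order_id": 2, "amount": 80.0}, {"order_id": "x", "amount": 5.0}])
except TypeError as err:
    print("rejected:", err)
print(first, len(table.read()))  # 1 1
```

Validating the full batch before committing is what keeps readers from ever seeing a half-written append; real table formats reach the same guarantee through atomic metadata commits.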

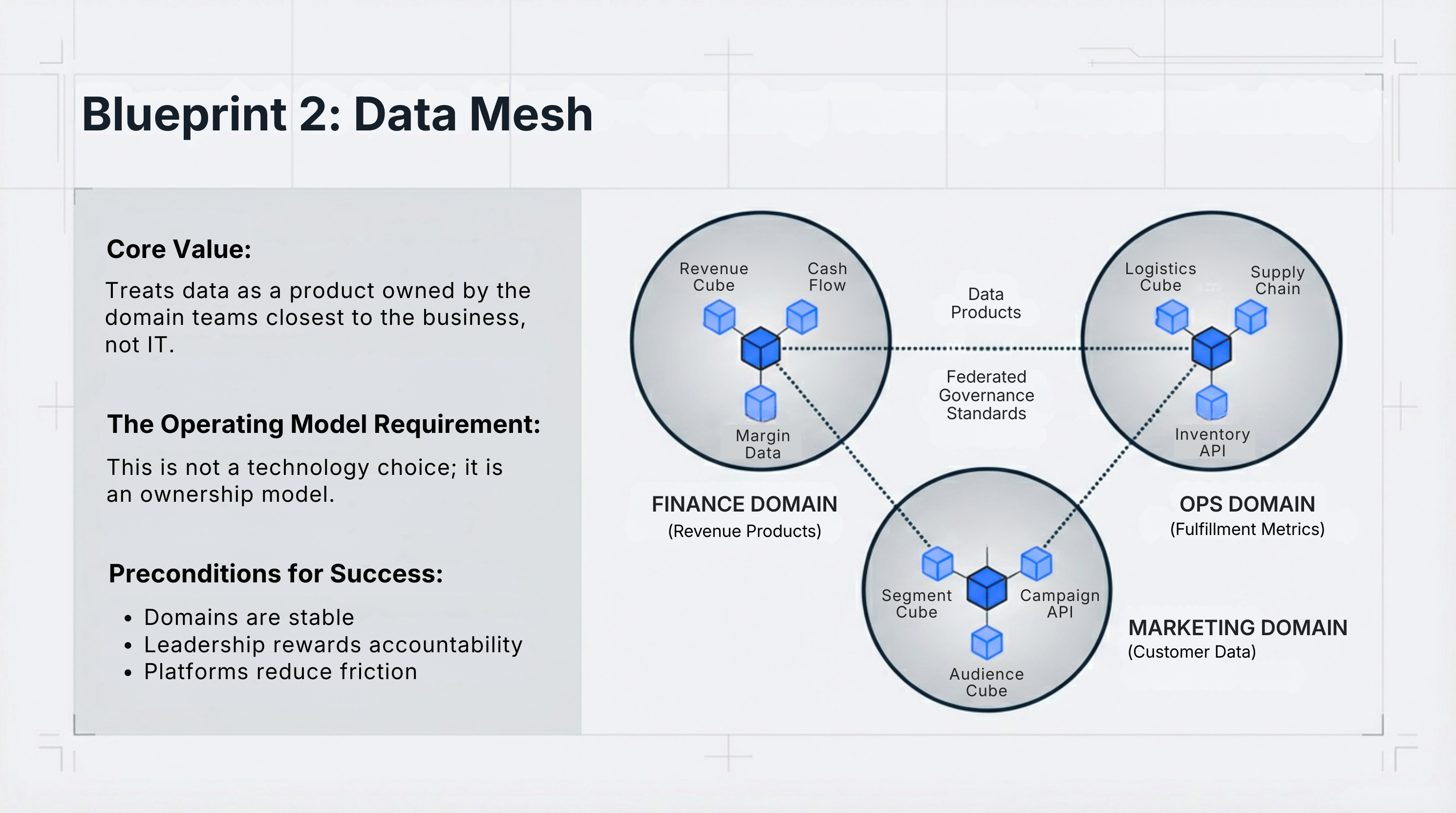

Data mesh is often misunderstood as a technology choice. It is not. It is an operating model for data ownership.

At its core, data mesh treats data as a product owned by the domain teams closest to the business (finance, operations, marketing), with explicit accountability for quality, availability, and usability.

What does “federated governance” look like in practice?

In successful mesh implementations, it includes:

For example, finance may own revenue and margin data products, while operations owns fulfillment and supply-chain metrics. Each publishes trusted datasets governed by shared rules but optimized for their decision context.

Without these conditions, mesh decentralizes inconsistency rather than insight.
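Federated governance can be pictured as a small set of shared rules applied uniformly to every domain's data products, while each domain decides everything else. The Python sketch below assumes a hypothetical `DataProduct` descriptor and four illustrative global rules (accountable owner, documentation, freshness SLA, PII masking); these are examples chosen for illustration, not a standard mesh specification. It reuses the finance and operations example above.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass(frozen=True)
class DataProduct:
    name: str
    owner_domain: str          # domain team accountable for the product
    description: str           # documentation for consumers
    freshness_sla_hours: int   # maximum data age the owner commits to
    contains_pii: bool
    pii_masked: bool = False


# A global rule returns an error message, or None if the product complies.
Rule = Callable[[DataProduct], Optional[str]]


def require_owner(p: DataProduct) -> Optional[str]:
    return None if p.owner_domain.strip() else "no accountable owner"


def require_docs(p: DataProduct) -> Optional[str]:
    return None if p.description.strip() else "missing description"


def require_sla(p: DataProduct) -> Optional[str]:
    return None if p.freshness_sla_hours > 0 else "no freshness SLA"


def require_pii_masking(p: DataProduct) -> Optional[str]:
    return "PII published unmasked" if p.contains_pii and not p.pii_masked else None


# Hypothetical shared rules; everything not listed here stays with the domain.
GLOBAL_RULES: List[Rule] = [require_owner, require_docs, require_sla, require_pii_masking]


def governance_violations(p: DataProduct, rules: List[Rule] = GLOBAL_RULES) -> List[str]:
    # Apply every shared rule and collect the messages of the ones that fail.
    return [msg for rule in rules if (msg := rule(p)) is not None]


revenue = DataProduct("revenue_daily", "finance", "Recognized revenue by day and region", 24, contains_pii=False)
fulfillment = DataProduct("fulfillment_events", "operations", "", 1, contains_pii=True)
print(governance_violations(revenue))      # []
print(governance_violations(fulfillment))  # ['missing description', 'PII published unmasked']
```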

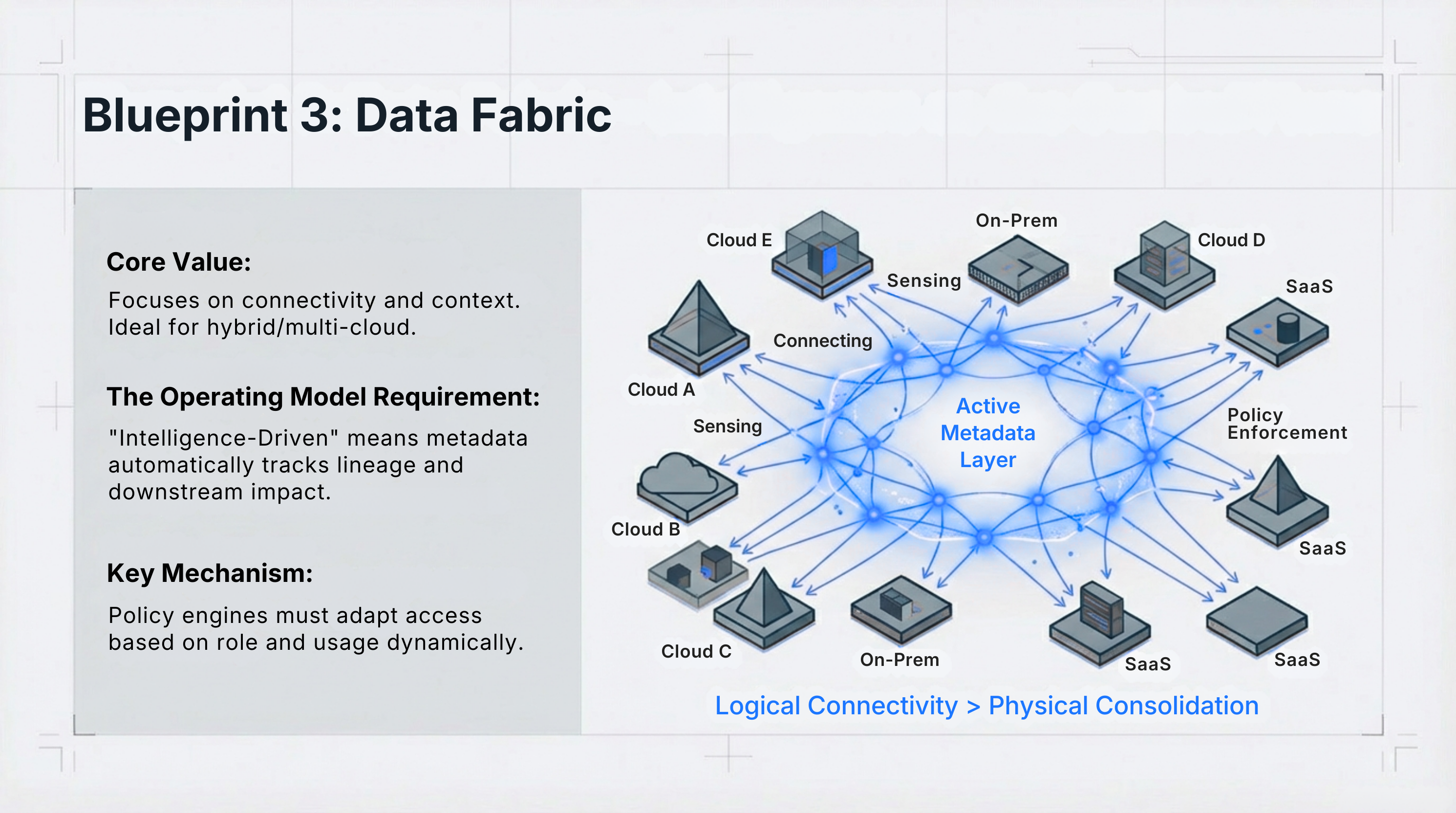

As hybrid and multi-cloud environments become the norm, physical consolidation loses relevance.

Data fabric architectures focus on connectivity, context, and control, using active metadata as the intelligence layer across distributed systems.

This is what “intelligence-driven” means in practice:

Fabric approaches are particularly effective where regulatory, geographic, or operational constraints prevent centralization, but decision consistency is still required.
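A minimal Python sketch of metadata-driven control, assuming a hypothetical `MetadataCatalog` and a simple residency rule: the catalog records where each dataset lives and how it is classified, decides per request whether to route a query to the owning system or deny it, and updates usage metadata as access is granted. System names, regions, and the rule itself are illustrative assumptions, not features of any particular fabric product.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class DatasetMetadata:
    system: str            # where the data physically lives (hypothetical names)
    region: str            # jurisdiction the data is stored in
    classification: str    # e.g. "internal" or "restricted"
    access_count: int = 0  # usage metadata, updated each time access is granted


@dataclass
class MetadataCatalog:
    datasets: Dict[str, DatasetMetadata] = field(default_factory=dict)

    def register(self, name: str, meta: DatasetMetadata) -> None:
        self.datasets[name] = meta

    def plan_access(self, name: str, requester_region: str) -> str:
        meta = self.datasets[name]
        # Assumed residency rule: restricted data is only readable from its own region.
        if meta.classification == "restricted" and requester_region != meta.region:
            return f"deny: {name} is restricted to {meta.region}"
        # No central copy is made; the query is routed to the owning system.
        meta.access_count += 1
        return f"query in place on {meta.system} ({meta.region})"


catalog = MetadataCatalog()
catalog.register("eu_customers", DatasetMetadata("eu_crm_db", "eu", "restricted"))
catalog.register("global_sales", DatasetMetadata("cloud_warehouse", "us", "internal"))
print(catalog.plan_access("eu_customers", "us"))  # deny: eu_customers is restricted to eu
print(catalog.plan_access("global_sales", "eu"))  # query in place on cloud_warehouse (us)
```

The point of the sketch is that the decision comes from metadata, not from moving data: the datasets stay where regulation or operations require, while every request is checked against the same rules.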

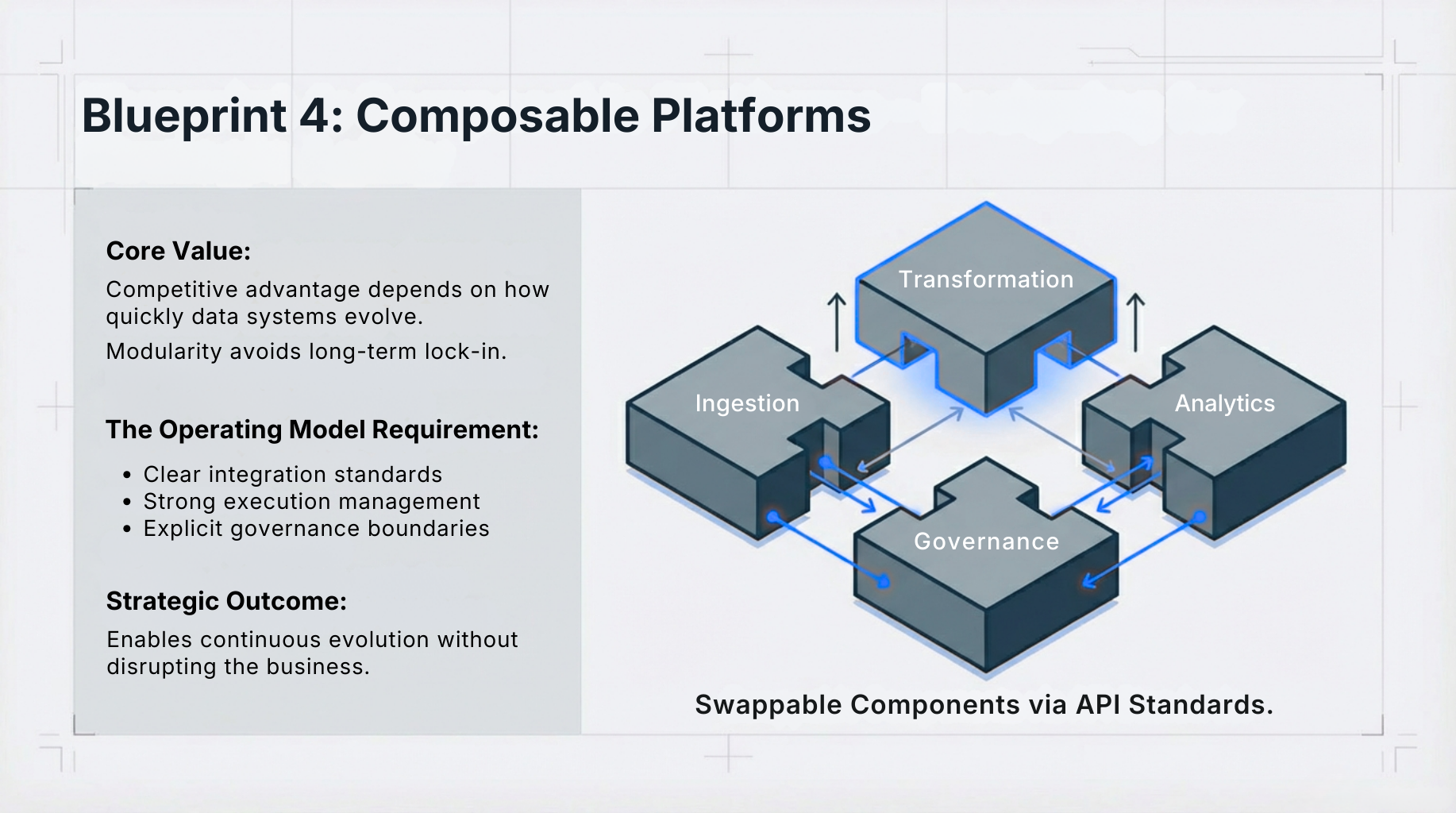

Composable data platforms reflect a strategic reality: competitive advantage now depends on how quickly data systems can evolve with business priorities.

By assembling modular components (ingestion, transformation, governance, analytics), organizations avoid long-term lock-in and enable targeted modernization.

When executed with discipline, composability enables continuous evolution without disruption.
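Composability largely comes down to stable interfaces between modules. The Python sketch below uses hypothetical `Source`, `Transform`, and `Sink` protocols with toy implementations to show a pipeline that depends only on those interfaces, so a transformation step can be added or swapped without touching ingestion or storage. It illustrates the design principle; it is not a reference architecture.

```python
from typing import Any, Dict, Iterable, List, Protocol

Record = Dict[str, Any]


# Interfaces each module must satisfy; implementations are interchangeable.
class Source(Protocol):
    def read(self) -> Iterable[Record]: ...


class Transform(Protocol):
    def apply(self, records: Iterable[Record]) -> Iterable[Record]: ...


class Sink(Protocol):
    def write(self, records: Iterable[Record]) -> None: ...


class ListSource:
    def __init__(self, rows: List[Record]) -> None:
        self.rows = rows

    def read(self) -> Iterable[Record]:
        return iter(self.rows)


class DropNullAmounts:
    def apply(self, records: Iterable[Record]) -> Iterable[Record]:
        return (r for r in records if r.get("amount") is not None)


class ScaleAmounts:
    def __init__(self, factor: float) -> None:
        self.factor = factor

    def apply(self, records: Iterable[Record]) -> Iterable[Record]:
        # Build new dicts so upstream records are not mutated.
        return ({**r, "amount": r["amount"] * self.factor} for r in records)


class ListSink:
    def __init__(self) -> None:
        self.rows: List[Record] = []

    def write(self, records: Iterable[Record]) -> None:
        self.rows.extend(records)


def run_pipeline(source: Source, transforms: List[Transform], sink: Sink) -> None:
    # The pipeline depends only on the interfaces, not on concrete classes.
    records = source.read()
    for step in transforms:
        records = step.apply(records)
    sink.write(records)


rows = [{"id": 1, "amount": 10.0}, {"id": 2, "amount": None}]
sink = ListSink()
run_pipeline(ListSource(rows), [DropNullAmounts()], sink)
print(sink.rows)  # [{'id': 1, 'amount': 10.0}]

# Add a transformation step without changing the source or sink modules.
scaled = ListSink()
run_pipeline(ListSource(rows), [DropNullAmounts(), ScaleAmounts(2.0)], scaled)
print(scaled.rows)  # [{'id': 1, 'amount': 20.0}]
```

In a real platform the same contract would typically sit at service or API boundaries rather than Python classes, but the design choice is the same: modules meet at agreed interfaces, so each can be replaced on its own schedule.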

Blueprint misalignment hides cost, duplicates effort, and obscures ROI. The challenge is no longer scale; it is resilience, interoperability, and simplicity under constant change.

Success depends on ownership models and governance automation, not just data quality metrics.

At Namasys Analytics, we consistently observe that most data programs fail at the blueprint level, not the platform level.
